The Funky Machine Learning Robot

from hackster.io

Engineer James Bruton is known for his many robotics creations. His Performance Robots, for example, can sometimes be spotted at events, where attendees can interact with them via highly accessible interfaces featuring large, colorful buttons, knobs, and floor pressure pads. Tapping away at these buttons will make the robots dance about and otherwise entertain an audience.

In the present environment, a touch-based interface shared by large crowds is not entirely practical or desirable, given concerns about the spread of infectious disease. Bruton still wanted to see the Performance Robots in action, however, so he came up with a new idea for the interface: a hands-free, computer vision-based approach.

Bruton started with an NVIDIA Jetson Nano and a Raspberry Pi camera module.

Images captured by the camera are passed to the Jetson, which runs inference with a PoseNet model that detects 17 keypoints across the body, arms, face, and legs. A Python script then analyzes these keypoints and translates them into recognizable gestures.
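As a rough illustration of turning keypoints into a gesture, the sketch below checks whether the left wrist is held above the left shoulder. It assumes the keypoints arrive as a dict of `(x, y)` pixel coordinates keyed by COCO-style names such as `left_wrist`, with y increasing downward; the dict layout, the `margin` value, and the function name are hypothetical and not taken from Bruton's script.

```python
from typing import Dict, Tuple

# Hypothetical keypoint layout: name -> (x, y) in image pixels, y grows downward.
Keypoints = Dict[str, Tuple[float, float]]


def left_arm_raised(kp: Keypoints, margin: float = 20.0) -> bool:
    """Return True if the left wrist is at least `margin` pixels above the left shoulder."""
    wrist = kp.get("left_wrist")
    shoulder = kp.get("left_shoulder")
    if wrist is None or shoulder is None:
        # A keypoint the model did not detect cannot support the gesture.
        return False
    # Smaller y means higher in the image, so "above" is a smaller y value.
    return wrist[1] < shoulder[1] - margin
```

For example, `left_arm_raised({"left_wrist": (300, 120), "left_shoulder": (310, 240)})` returns `True`. The margin keeps small jitters in the pose estimate from toggling the gesture on and off when the wrist hovers near shoulder height.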

The Performance Robots are controlled via DMX, a protocol usually used to control movable light fixtures. This means they can be driven by Q Light Controller Plus (QLC+), an open-source lighting control package, which simplifies their operation. To interface with QLC+ programmatically, Bruton used the Open Sound Control (OSC) network protocol, with the details handled by the python-osc library.

With this setup in place, Bruton could trigger specific robot actions by sending OSC messages to Q Light Controller Plus whenever particular gestures were recognized. Each action is carried out by coordinating the movements of several servos fitted into the robot's joints.
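One way to wire recognized gestures to OSC messages is to fire each action only when a gesture first appears, so that holding a pose does not resend the same message on every camera frame. The sketch below assumes that edge-triggered behaviour; the gesture names, the addresses in `GESTURE_ACTIONS`, and the `GestureTrigger` class are hypothetical, and the `send` callback stands in for whatever OSC client is used.

```python
from typing import Callable, Dict, List, Set

# Hypothetical mapping from gesture names to OSC addresses; the real
# addresses depend on how the QLC+ workspace is set up.
GESTURE_ACTIONS: Dict[str, str] = {
    "left_arm_up": "/robot/dance1",
    "right_arm_up": "/robot/dance2",
}


class GestureTrigger:
    """Send an OSC message once when a gesture starts, not on every frame it persists."""

    def __init__(self, send: Callable[[str, float], None]) -> None:
        self.send = send               # e.g. an OSC client's send function
        self.active: Set[str] = set()  # gestures seen in the previous frame

    def update(self, gestures: Set[str]) -> List[str]:
        """Process one frame's gestures; return the addresses that were sent."""
        fired = []
        # Only gestures that were not active last frame count as new.
        for name in sorted(gestures - self.active):
            address = GESTURE_ACTIONS.get(name)
            if address is not None:
                self.send(address, 1.0)
                fired.append(address)
        self.active = set(gestures)
        return fired
```

Calling `update()` once per processed frame means a raised arm triggers its move once, and lowering and raising it again triggers it a second time.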

Bruton also added a very nice touch: an algorithm that smooths servo movement speeds, so the robot's actions look natural rather than starting and stopping abruptly.

To make the software work in real-world situations, where a crowd of people may surround the intended operator, Bruton needed a way to identify which person should have control of the robot.
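Bruton's servo smoothing is not described in detail, so the sketch below shows one common technique (not necessarily his): interpolating from the current angle to the target with the ease-in-out curve 3t² - 2t³, whose slope is zero at both ends. The servo therefore accelerates gently, moves fastest mid-way, and slows before stopping. The function names and the idea of sending one angle per control tick are assumptions.

```python
from typing import List


def smoothstep(t: float) -> float:
    """Ease-in-out curve 3t^2 - 2t^3 on [0, 1], with zero slope at t=0 and t=1."""
    t = min(max(t, 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)


def servo_path(start: float, target: float, steps: int) -> List[float]:
    """Angles (degrees) for `steps` control ticks moving from `start` to `target`.

    Instead of jumping straight to the target or moving at constant speed,
    each tick follows the smoothstep curve, giving a slow-fast-slow motion.
    """
    if steps < 1:
        raise ValueError("steps must be at least 1")
    return [start + (target - start) * smoothstep(i / steps) for i in range(1, steps + 1)]
```

For example, `servo_path(0.0, 90.0, 4)` returns `[14.0625, 45.0, 75.9375, 90.0]`: the step sizes are 14.0625, 30.9375, 30.9375, and 14.0625 degrees, small at the start and end and largest in the middle.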

Bruton wanted the person closest to the camera to be in control, so he used a relatively simple trick: the person with the largest distance between their eyes is presumed to be the closest, and is given control.
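The closest-person rule can be sketched in a few lines: measure the pixel distance between each detected person's eyes and pick the largest. The per-person dict format and the `left_eye`/`right_eye` key names are assumptions modelled on COCO-style keypoint names, not Bruton's actual data structures.

```python
import math
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]


def eye_distance(person: Dict[str, Point]) -> float:
    """Pixel distance between the two eyes, or 0.0 if either eye was not detected."""
    left = person.get("left_eye")
    right = person.get("right_eye")
    if left is None or right is None:
        return 0.0
    return math.dist(left, right)


def pick_operator(people: List[Dict[str, Point]]) -> Optional[int]:
    """Index of the person with the widest eye spacing (presumed closest), or None."""
    best_index: Optional[int] = None
    best_distance = 0.0
    for i, person in enumerate(people):
        d = eye_distance(person)
        if d > best_distance:
            best_index, best_distance = i, d
    return best_index
```

Eye spacing in the image shrinks with distance, which is what makes it a cheap proxy for depth. It also shrinks when a face turns sideways, so someone facing the camera is favoured over a closer person looking away.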

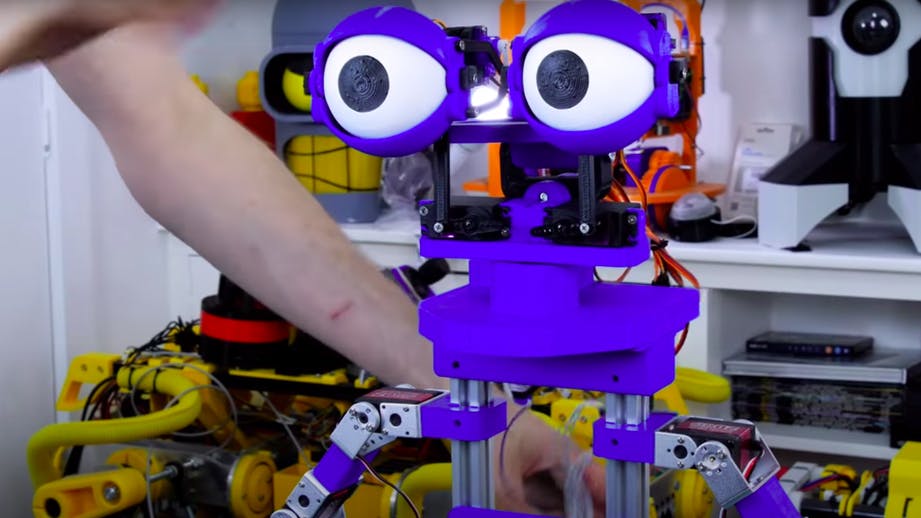

The Performance Robots are in storage at present, so a more recent robot creation was used to test out the method. The end result is a cute little purple frog-like robot with some predefined dance moves that run in a loop when triggered.

When the operator lifts their left arm, for example, the robot raises its arm too, adding smooth wave-like motions that turn the gesture into a dance move.
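A looping, wave-like dance move can be approximated by driving each joint with a sine wave around a base angle, offsetting the phase from joint to joint so the motion appears to ripple through the body. This is only an illustrative sketch; the joint names, angles, amplitudes, and period below are hypothetical, and the actual moves are defined in Bruton's own project files.

```python
import math
from typing import Dict


def wave_angle(t: float, base: float, amplitude: float = 20.0,
               period: float = 2.0, phase: float = 0.0) -> float:
    """Servo angle (degrees) at time t seconds, swaying +/- amplitude around base.

    sin() is periodic, so the move repeats every `period` seconds for as
    long as it keeps being evaluated.
    """
    return base + amplitude * math.sin(2.0 * math.pi * t / period - phase)


def dance_frame(t: float) -> Dict[str, float]:
    """One frame of a hypothetical raised-arm wave across three joints."""
    return {
        # Arm held high, swaying around 150 degrees.
        "left_shoulder": wave_angle(t, base=150.0),
        # Elbow lags the shoulder by an eighth of a cycle.
        "left_elbow": wave_angle(t, base=90.0, amplitude=15.0, phase=math.pi / 4),
        # Body lags by a quarter cycle, so the wave ripples downward.
        "body": wave_angle(t, base=90.0, amplitude=10.0, phase=math.pi / 2),
    }
```

Sampling `dance_frame(t)` at a fixed rate while the move is triggered gives continuous, smooth positions, and the phase offsets are what make the joints move as a wave rather than in lockstep.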

The code and CAD files are available on GitHub, and provide an excellent example of how to turn machine learning-based gesture recognition into actions in the real world.
